When we decided that Daala would use a lapped transform, we knew it would have an impact on intra prediction. Last year, Monty demonstrated a new frequency domain intra prediction scheme that would solve the problem. Fast-forward one year and it turned out not to be the case for a few reasons:

Considering the limited performance of our intra prediction and the development of Haar DC (multi-level coding of the DC), we recently decided to disable the current frequency-domain intra predictor. We started looking at other options to solve what is currently Daala's main weakness when it comes to compressing still-image and keyframes: noise/ringing on directional patterns and edges. Eventually, I started to think of a radical alternative to intra prediction: designing a limited codec that can only code directional patterns and edges, and then leave the residual to the current encoder. This first-pass intra paint codec operates entirely in the spatial (pixel) domain.

Most intra predictors work along with the rest of the codec to predict one block at a time from previous blocks. Any residual error from this prediction is transformed and coded as part of the current block. The process repeats, forming a traditional coding loop. Intra paint is a codec of its own that sits in front of the main codec and coding loop. It does not use or depend on the residual error, it simply passes the error along to the main codec. It's even possible to code an entire image with intra paint alone.

The algorithm works in four steps:

1. Block sizes: Divide the image into blocks of fixed or variable size. Variable-size blocks

make it possible to use large blocks on long, continuous edges and small blocks

where edges intersect or change direction.

2. Direction Search: Determine which direction best matches the pattern in each block. The direction

needs to be signaled, but it is cheap to code because it is usually highly-correlated

across adjacent blocks.

3. Boundary pixels: Determine the pixel values at block boundaries that optimally match

the image using the directions found in step 2. This is where most of the

bits would normally be spent. We can use intra prediction from other block

boundaries to help save bits in this step.

4. Painting: For each block, use all four boundaries as well as the direction to

paint the pixels inside the block. The boundary pixels encoded in step 3 are not taken directly

from the original image, but rather optimized to give the best prediction of the original

image at this stage.

Block discontinuities are avoided by blending

within blocks based on the distance to each boundary.

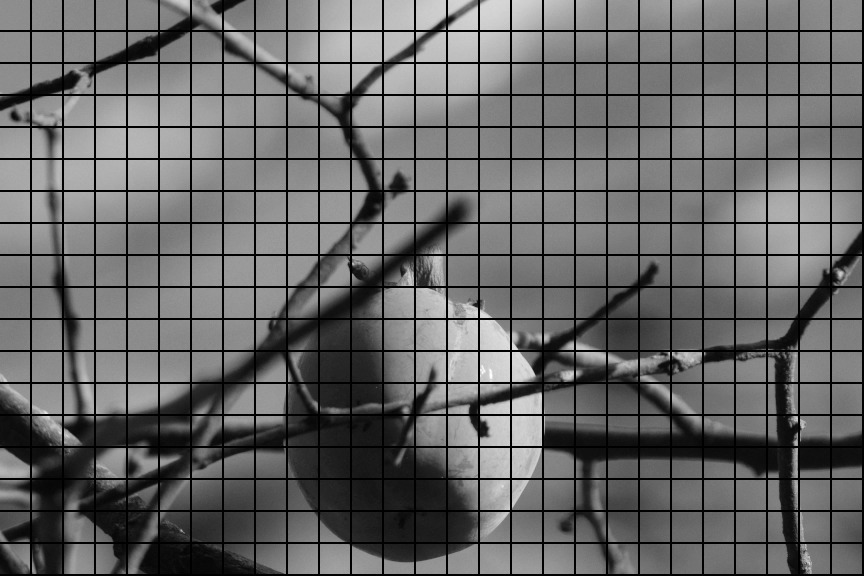

Without quantization, the paint algorithm by itself can make some pretty pictures:

Just for fun (there's absolutely no compression application to this), this is what paint looks like when applied independently to each frame of a video.

Looks great, doesn't it? Surely enough to significantly improve Daala's performance on directional patterns and edges. Well, not really. Turns out it's not so easy to code image data twice and still come out ahead. More specifically, the approach works fine in regions of the image that are well represented by our directional model, but it bleeds away too many bits in other areas that are not so well predicted. And because of lapping and block size alignment issues, we cannot just turn it on and off when we want. Yet another neat idea that turns out to fail due to practical considerations.

But not all hope is lost yet. There is potentially another way to make use of the paint approach beyond GIMP plugins. It can be used as a post-processing step to attenuate the coding artefacts. The idea is that there are regions of the image (e.g. close to edges) where the painted version looks better than the coded image, especially at low bitrate. Of course, it would be bad to replace the entire image with the painted version. We would only want to do use it in places where it improves quality. We also want to avoid spending too many bits on the painting process or on signaling which are the pixels that benefit.

First, the painting part is easy. Instead of running the paint algorithm on the original image and sending information about the directions and edges, we can simply run the algorithm on the decoded image. The result is not nearly as good, but hey we have a free lunch here.

The second part is trickier. How can we know which pixels should be replaced with the painted pixels and which ones are best unmodified? Intuitively, we see that in regions of the image that have clear directional patterns, the painted image should be much closer to the decoded image than in regions with unpredictable texture. Not only that, but knowing the quality at which the image was coded, we have an idea of the amount of quantization noise that was introduced, which is also the magnitude of the difference we can expect between decoded image and its painted version.

The actual equations look disturbingly similar to other equations I have worked on before: those for noise suppression in audio signals. After about a page of least squares derivations, we get this very simple expression for the optimal weight to apply to the painted image:

where Q is the image quality (quantization step size), σ2 is the mean squared distance between decoded image and the painted image, and α is a tunable parameter between 0 and 1. For each boundary pixel, we compute a weight along the direction of each adjacent block. This gives us more control than computing the weight at the block level. Now, there are still cases where we might get it wrong and where our deringing would hurt image quality by blurring texture. For these cases, there is also a per-block on/off switch that needs to be signaled. It's the only information that appears to be worth signaling for now.

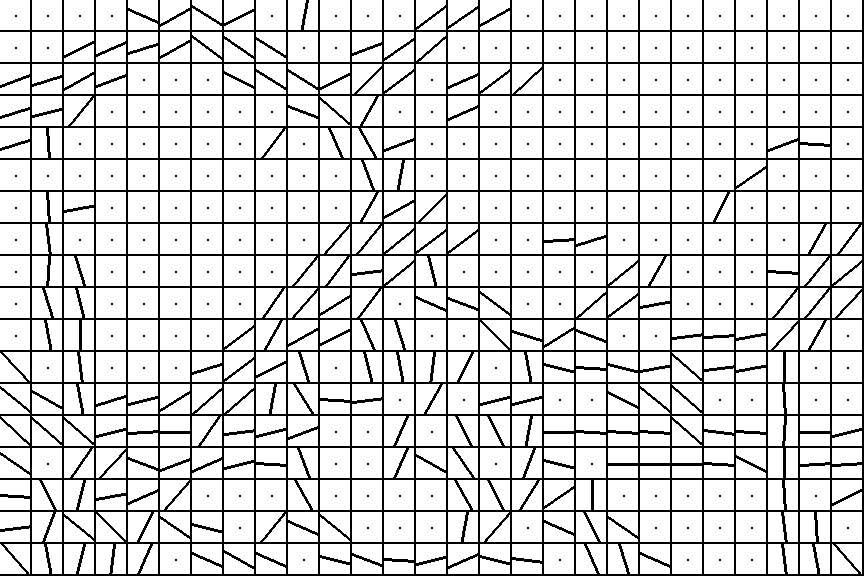

This is what the images at various steps of the deringing process look like. This time we use 8x8 blocks.

| Deringing based on the paint algorithm |

|

Deringing filter steps from the coded image to the final processed image. Notice how the painting process reduces jaggedness of the branches, while blurring the details of the large branch at the bottom. Weighing brings back some of the details and the on/off switch at the end almost completely eliminates the remaining blurring.

Putting chroma back into the image (deringing is only applied to luma for now), this is the effect of the deringing filter

To give an idea of what bit-rates we're talking about, the image above is a 864x576 section of a larger 1296x864 image that was encoded with Daala in just 15 kB. With the deringing filter signaling, it should end up at around 16 kB. As a comparison, here's what JPEG looks like at the same rate.

So all is good and well integrated in the Daala codebase, right? Well, not yet, because there's a minor detail I haven't mentioned: complexity. The current version is horribly complex, as it computes dozens of divisions and square roots per pixel. Total computation time is about 3 seconds for a single image, most of which happening in the direction search. Clearly this is unacceptable, so the next step is to find cheap ways to search for the direction. The divisions and square roots should be easy to get rid of, but we need a little more than that, possibly a coarser search with iterative refinement, or maybe even a completely different technique. One complication with this search is that it runs in the decoder, which implies that every Daala implementation will have to produce bit-exact results for the search. On the plus side, the algorithm is fundamentally infinitely parallel, all computations can be made independently on all blocks, so we can easily use all the CPUs in the machine. Even GPUs should be able to handle this.

So the next step is to find a fast direction search algorithm. This will

decide on whether the paint post-processing filter is viable or not.

Suggestions and (especially) working code are welcome. The current code is

in the exp_paint_deringing branch of

my personal Daala repository.